ARM Data Flow: A Look Back

Published: 19 September 2016

From its inception in 1990, the Atmospheric Radiation Measurement (ARM) Climate Research Facility has focused on four topics: science, instruments, sites, and data.

Over time, the science focus evolved, expanding from radiative transfer to atmospheric processes and then to improved parameterizations for models. Instruments got more robust and numerous. The number of sites grew; ARM added mobile sites and aircraft measurement platforms.

The ARM data life cycle evolved too, but the data imperative never changed: Provide continuous observational data of “known and reasonable quality” to help scientists improve their atmospheric process models.

The history of ARM is admirably detailed in the retrospective monograph published online this spring by the American Meteorological Society. Here are a few data history highlights, culled from “The ARM Data System and Archive” chapter:

1990-1993

The data system is divided into three logical groups that remain in place today: site systems, processing facility, and archive.

1992-1997

ARM expands from a few instruments at one location, Southern Great Plains, to several facilities at the same location. Two other sites come online: Tropical Western Pacific, now closed, and North Slope of Alaska.

ARM initially plans a single unified data system, then shifts to one based on multiple single-source datastreams, each from a single instrument or algorithm.

ARM adopts the Network Common Data Form (NetCDF) file structure and decides to archive both raw and processed data. The original “real-time” goal becomes “near-real-time,” with data generally available within two days.

As the volume of data grows, ARM adopts new approaches, including automated quality control “flags” for data values that are too high, too low, or erratic; automated quality measurement experiments; and manually entered data quality reports (DQRs) to capture events, instrument conditions, and operating conditions. DQRs now serve as “companion” information provided to researchers when they request data.
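To make the idea of automated flags concrete, here is a minimal sketch in Python of how a check for values that are too high, too low, or erratic might look. It is an illustration only, not ARM's actual implementation: the function name `qc_flags`, the bit values, and the thresholds are all hypothetical, and "erratic" is assumed here to mean a jump between consecutive samples that exceeds a chosen limit.

```python
from typing import List, Sequence

# Hypothetical bit values for illustration; not ARM's actual QC bit assignments.
FLAG_TOO_LOW = 1
FLAG_TOO_HIGH = 2
FLAG_ERRATIC = 4


def qc_flags(values: Sequence[float], valid_min: float, valid_max: float,
             max_delta: float) -> List[int]:
    """Return one integer flag per value; 0 means no test failed."""
    flags = []
    previous = None
    for value in values:
        flag = 0
        if value < valid_min:
            flag |= FLAG_TOO_LOW
        if value > valid_max:
            flag |= FLAG_TOO_HIGH
        # Assumed definition of "erratic": a sample-to-sample jump above max_delta.
        if previous is not None and abs(value - previous) > max_delta:
            flag |= FLAG_ERRATIC
        flags.append(flag)
        previous = value
    return flags


# Made-up temperature readings in degrees Celsius, with made-up limits.
readings = [15.0, 15.5, 60.0, 16.0, -95.0]
print(qc_flags(readings, valid_min=-90.0, valid_max=50.0, max_delta=10.0))
```

Storing each failed test as a separate bit lets one integer per sample record several problems at once, so a reader of the data can see which checks failed rather than only that something did.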

ARM changes from “pushing” data to researchers to having them “pull” the data they need.

Advances in the web allow for a dynamic selection of data, customized lists of data products and ranges, massively scaled up archives, and improved organizational structure.

1998-2007

ARM continues to expand, increasing the number of instruments and adding new capabilities. At the same time, to manage data from the expanded instrument suite more efficiently and effectively, data management enters an era of consolidation.

The focus shifts from sites to science themes. A new programmatic structure focuses on common practices for site data systems, software, quality review, and data products. By 2000, the new Data Management Facility (DMF) opens, where ARM consolidates production-scale processing of data and software development.

ARM standardizes data systems and instrument computers. It opens the Data Quality Office in 2000, and by 2001 introduces quality control (QC) “flags.” DQRs are restructured into portable web pages. The first attempts are made at a “quality color” scheme (red, yellow, and green), based on QC flags and DQRs.

Data reprocessing moves from site data systems to the DMF, where reprocessing is formalized and more robust algorithms are applied. To create better value-added products (VAPs), ARM pairs software developers with “translators,” infrastructure scientists who can interpret scientific algorithms and review interim results.

In 2004, ARM becomes a national scientific user facility. A new database helps maintain consistency in the design of ARM data products. In 2006, ARM defines and adopts a new metadata structure. To accommodate growing data products from field campaigns, ARM develops new data design principles.

ARM adopts a new query logic to give researchers hierarchical summaries of data availability. A new web interface provides precomputed thumbnail data plots that allow users to customize displays of specific measurements from multiple data products.

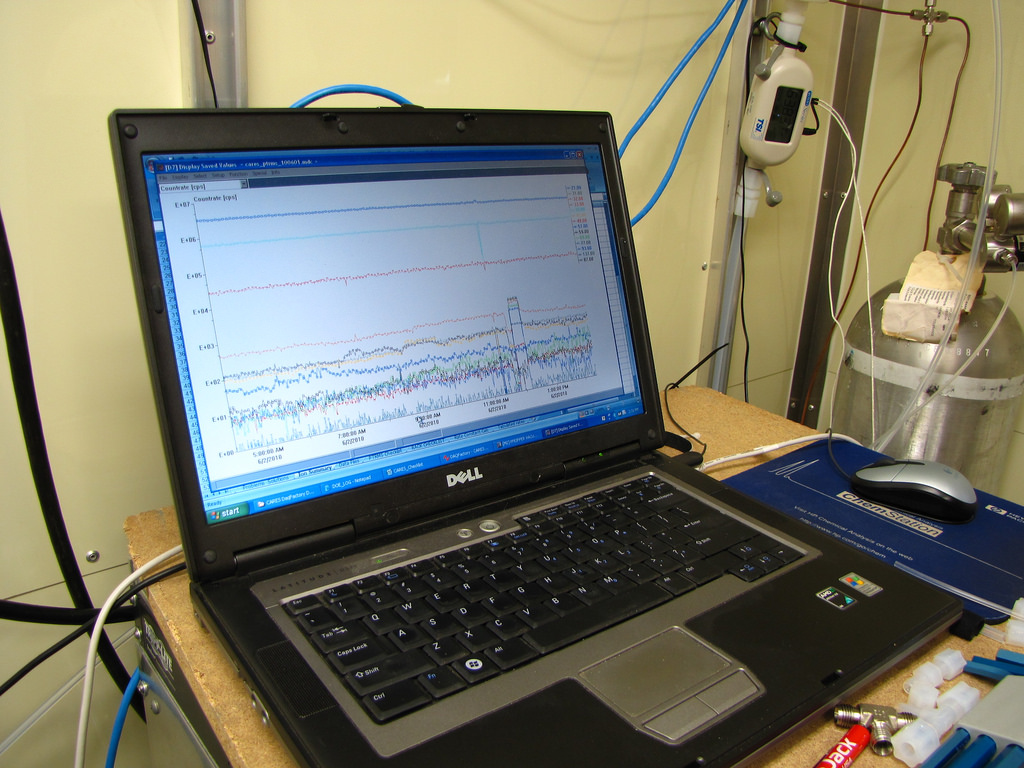

ARM develops the NCVweb tool so users can interactively plot small amounts of NetCDF data and zoom in on measurement details.

Starting in 2007, ARM develops model-ready, condensed, very specialized “showcase” data products. They include logic to replace missing values and create “best-estimate” products.

That same year, ARM deploys its first mobile facility, an expansion of instrumentation that will add to the growing volume of data.

2008-present

ARM adds new sites and many new instruments, and data volume grows fast. Data systems get more robust and nimble.

ARM adds a second mobile facility in 2008-2010, and a third in 2012-2014. A new fixed site in the Azores goes online in 2012-2014.

ARM acquires many new instruments during the 2009-2011 Recovery Act funding surge. New scanning instruments add new data products and new demands for storage. The daily cloud radar data set alone increases five-fold, from 20 GB to 100 GB. Outgoing data volume increases 10-fold and incoming 120-fold.

Data storage increases, along with data access. In 2012, ARM moves its DMF hardware from Pacific Northwest National Laboratory to Oak Ridge National Laboratory, combining network and storage devices with those at the ARM Data Archive.

ARM adds an integrated software development environment, providing standardized software tools for common software tasks.

ARM develops a data standards document. In 2012, it improves its metadata, including a means to add field campaign metadata.

With the addition of very large data sets from new instruments following the Recovery Act, ARM creates a new computing cluster. That adds very large amounts of shared memory and tens of terabytes of storage. ARM installs new, popular scientific software systems.

In October 2014, an ARM “Decadal Vision” report includes a section on enhancing data products and processes. The issues: bridging observations and models, improving discoverability, better communication of data quality and uncertainty, linking data to citations, ensuring security and stability of ARM data and software, and integrating ARM data with other climate measurements and simulations at the U.S. Department of Energy’s Office of Biological and Environmental Research.

In September 2015, ARM announces the design of the Large-Eddy Simulation (LES) ARM Symbiotic Simulation and Observation (LASSO) workflow. The two-year prototype strategy, starting with modeling shallow convection at the Southern Great Plains fixed site, will link a routine, high-resolution modeling capability with ARM’s high-density observations. “This new modeling ability,” the strategy document states, “will play a pivotal role in realizing the full potential of ARM observations.”

# # #

The ARM Climate Research Facility is a national scientific user facility funded through the U.S. Department of Energy’s Office of Science. The ARM Facility is operated by nine Department of Energy national laboratories.
